Welcome, data enthusiasts, to the exhilarating world of cross validation! In this article, we'll explore how to make your data do crossfit through the powerful technique of cross validation. Get ready to take your model training to the next level and unleash the full potential of your data. Let's dive in!

Introduction to Train-Test Split: A Good Start 📊

When developing machine learning models, it's common practice to split our data into two sets: a training set and a test set. The training set is used to train the model, while the test set is used to evaluate its performance on unseen data. This approach, known as train-test split, provides a simple way to gauge how well our model generalizes.
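Here is a minimal sketch of a train-test split. The helper `simple_train_test_split` is a hypothetical name used only for illustration. It shuffles a copy of the data with a fixed seed and holds out a fraction of it as the test set, using only the Python standard library. In real projects, scikit-learn's `sklearn.model_selection.train_test_split` does the same job.

```python
import random


def simple_train_test_split(data, test_fraction=0.2, seed=0):
    """Hypothetical helper: shuffle a copy of `data` and split it into (train, test)."""
    rng = random.Random(seed)  # fixed seed so the split is reproducible
    shuffled = list(data)
    rng.shuffle(shuffled)
    n_test = int(len(shuffled) * test_fraction)
    # The first n_test shuffled items are held out; the rest are used for training.
    return shuffled[n_test:], shuffled[:n_test]


train, test = simple_train_test_split(list(range(10)), test_fraction=0.3)
print(len(train), len(test))  # 7 3
```

Changing `seed` changes which points land in the test set, and therefore changes the score you measure. That sensitivity is the weakness cross validation is meant to address.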

The Power of Cross Validation: Raising the Bar ✨

While train-test split is a valuable technique, it has limitations. A single split yields a single performance estimate. That estimate can be overly optimistic or pessimistic depending on which data points happen to land in the test set, and the effect is strongest on small datasets. Enter cross validation, a technique that takes model evaluation to the next level.

Cross validation involves dividing the data into multiple folds and iteratively training and testing the model on different subsets. The most common type of cross validation is k-fold cross validation. Here's how it works:

- Split the data into k folds of (roughly) equal size, often after shuffling it.

- Train the model on k-1 folds and evaluate it on the remaining fold.

- Repeat this process k times, each time using a different fold as the test set.

- Calculate the average performance across all k iterations.
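The four steps above can be sketched in plain Python. This is a minimal illustration, not a library API. `k_fold_indices`, `cross_validate`, `fit_mean` and `mse_score` are hypothetical names chosen here, and the "model" is a deliberately trivial baseline that always predicts the mean of its training targets. The contiguous, unshuffled folds resemble scikit-learn's `KFold` with its default `shuffle=False`, and scikit-learn's `cross_val_score` wraps the whole loop for real estimators.

```python
def k_fold_indices(n_samples, k):
    """Yield (train_idx, test_idx) for k contiguous folds of near-equal size."""
    # The first n_samples % k folds get one extra sample, so sizes differ by at most one.
    fold_sizes = [n_samples // k + (1 if i < n_samples % k else 0) for i in range(k)]
    start = 0
    for size in fold_sizes:
        test_idx = list(range(start, start + size))
        train_idx = list(range(start)) + list(range(start + size, n_samples))
        yield train_idx, test_idx
        start += size


def cross_validate(xs, ys, fit, score, k=5):
    """Fit on k-1 folds, score on the held-out fold, repeat k times, return the mean."""
    scores = []
    for train_idx, test_idx in k_fold_indices(len(xs), k):
        model = fit([xs[i] for i in train_idx], [ys[i] for i in train_idx])
        scores.append(score(model, [xs[i] for i in test_idx], [ys[i] for i in test_idx]))
    return sum(scores) / len(scores), scores


def fit_mean(xs, ys):
    # Hypothetical baseline "model": its only parameter is the training mean.
    return sum(ys) / len(ys)


def mse_score(prediction, xs, ys):
    # Mean squared error of a constant prediction on the held-out fold.
    return sum((y - prediction) ** 2 for y in ys) / len(ys)


mean_score, fold_scores = cross_validate(list(range(10)), [1.0, 2.0] * 5, fit_mean, mse_score, k=5)
print(mean_score, fold_scores)  # 0.25 [0.25, 0.25, 0.25, 0.25, 0.25]
```

Passing `fit` and `score` as functions keeps the splitting logic independent of any particular model. Any callable pair with the same shape can be plugged in.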

Why Cross Validation is Better: More Reliable Results 🎯

Cross validation offers several advantages over train-test split:

- Better Utilization of Data: In k-fold cross validation, every data point is used for testing exactly once and for training in the other k-1 iterations. None of the available information is permanently set aside.

- Reduced Variability: By averaging the results over multiple iterations, cross validation provides a more stable and reliable estimate of model performance.

- Fair Comparison of Models: Cross validation allows for a fair comparison of different models, provided they are evaluated on the same folds.

- Hyperparameter Tuning: Cross validation is often used to tune hyperparameters, helping us pick the best-performing configuration among the candidates we try.
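The sketch below illustrates both the fair-comparison and the tuning points. It picks the `n_neighbors` setting of a tiny hand-written 1-D nearest-neighbour regressor by cross-validated mean squared error. The key design choice is that the folds are generated once, with a fixed seed, and then reused for every candidate, so all candidates are compared on identical train/test splits. The data, the candidate list and all function names are hypothetical. For real estimators, scikit-learn's `GridSearchCV` automates this kind of search.

```python
import random


def shuffled_k_folds(n_samples, k, seed=0):
    """Return a fixed list of (train_idx, test_idx) pairs built from shuffled indices."""
    indices = list(range(n_samples))
    random.Random(seed).shuffle(indices)
    folds = [indices[i::k] for i in range(k)]  # deal the shuffled indices out like cards
    splits = []
    for f in range(k):
        train_idx = [i for j, fold in enumerate(folds) if j != f for i in fold]
        splits.append((train_idx, folds[f]))
    return splits


def knn_predict(train_x, train_y, x, n_neighbors):
    """1-D nearest-neighbour regression: average the targets of the closest points."""
    nearest = sorted(range(len(train_x)), key=lambda i: abs(train_x[i] - x))[:n_neighbors]
    return sum(train_y[i] for i in nearest) / len(nearest)


def cv_mse(xs, ys, splits, n_neighbors):
    """Mean squared error, averaged over the given splits."""
    fold_errors = []
    for train_idx, test_idx in splits:
        tx, ty = [xs[i] for i in train_idx], [ys[i] for i in train_idx]
        errors = [(ys[i] - knn_predict(tx, ty, xs[i], n_neighbors)) ** 2 for i in test_idx]
        fold_errors.append(sum(errors) / len(errors))
    return sum(fold_errors) / len(fold_errors)


xs = [i / 2 for i in range(20)]
ys = [x * x for x in xs]  # a simple deterministic target, for illustration only
splits = shuffled_k_folds(len(xs), k=5)  # computed once and shared by every candidate

candidates = [1, 3, 5, 9]
scores = {n: cv_mse(xs, ys, splits, n) for n in candidates}
best = min(scores, key=scores.get)
print(scores, "best n_neighbors:", best)
```

The winning score is itself slightly optimistic, because the same folds were used both to choose the setting and to measure it. Nested cross validation is designed to remove that bias.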

Cross Validation for Time Series Data: A Time Travel Twist ⏰

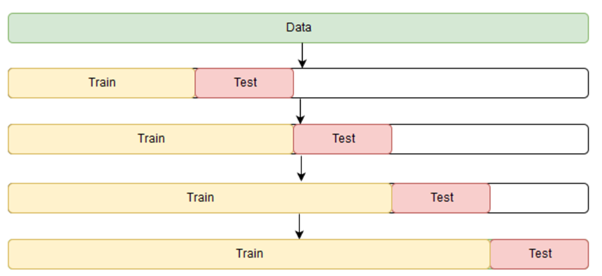

When working with time series data, such as stock prices or weather data, the temporal order of the data points is crucial. Standard k-fold cross validation ignores that order, so a model can end up trained on observations that come after the ones it is tested on. This "time leakage" lets future information into the training set and inflates the performance estimate. To address this, we use specialized techniques like time series cross validation.

Time series cross validation splits the data into sequential folds so that, in every split, the training data only includes observations that precede the test data. The training window either grows with each split (an expanding window) or moves forward at a fixed length (a rolling window). This preserves the temporal order and provides a more realistic evaluation of model performance in time-dependent scenarios.
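The expanding-window scheme can be sketched as below, assuming the observations are already sorted by time. The function name is hypothetical. The split arithmetic is intended to follow the default behaviour of scikit-learn's `TimeSeriesSplit` (no gap, no maximum training size), but treat that correspondence as an assumption to verify against its documentation.

```python
def time_series_splits(n_samples, n_splits):
    """Expanding-window splits: every training index precedes every test index."""
    test_size = n_samples // (n_splits + 1)
    for i in range(n_splits):
        # Each split moves the test window forward by test_size and grows the training window.
        train_end = n_samples - (n_splits - i) * test_size
        yield list(range(train_end)), list(range(train_end, train_end + test_size))


for train_idx, test_idx in time_series_splits(10, n_splits=3):
    print(train_idx, test_idx)
# [0, 1, 2, 3] [4, 5]
# [0, 1, 2, 3, 4, 5] [6, 7]
# [0, 1, 2, 3, 4, 5, 6, 7] [8, 9]
```

A rolling-window variant would keep only the last fixed number of indices before `train_end` instead of all of them.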

Specialized Types of Cross Validation: Customized Fitness Regimens 💪

Beyond k-fold cross validation, there are specialized techniques tailored to specific situations. Here are a few notable ones:

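- Leave-One-Out (LOO): Each data point in turn serves as a one-element test set, while all remaining points form the training set. LOO is equivalent to k-fold cross validation with k equal to the number of samples, so it requires one model fit per data point. This makes it most practical for small datasets.

- Stratified Cross Validation: This technique preserves class proportions when splitting the data, so each fold has approximately the same mix of classes as the full dataset. This matters most for imbalanced classification problems, where a plain random split can leave a rare class underrepresented (or absent) in some folds.

- Nested Cross Validation: An inner cross validation loop tunes hyperparameters (model selection) on each outer training set. An outer loop then evaluates the tuned model on folds the inner loop never saw. Keeping the data used to choose hyperparameters separate from the data used to report performance avoids overfitting the evaluation to the tuning process and gives a more robust estimate of model performance.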
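Two of these splitters are short enough to sketch directly. The functions below are hypothetical, standard-library-only illustrations. Leave-one-out is simply k-fold with k equal to the number of samples. The stratified version deals each class's indices round-robin across the folds. For real use, scikit-learn provides `LeaveOneOut` and `StratifiedKFold`, whose stratification algorithm may differ in detail from this simplified round-robin.

```python
from collections import defaultdict


def leave_one_out(n_samples):
    """Each sample is the test set exactly once; all other samples form the training set."""
    for i in range(n_samples):
        yield [j for j in range(n_samples) if j != i], [i]


def stratified_k_fold(labels, k):
    """Deal each class's indices round-robin across k folds to keep class proportions."""
    folds = [[] for _ in range(k)]
    by_class = defaultdict(list)
    for idx, label in enumerate(labels):
        by_class[label].append(idx)
    position = 0
    for label in sorted(by_class):  # sorted so the output is deterministic
        for idx in by_class[label]:
            folds[position % k].append(idx)
            position += 1  # continue the rotation across classes to balance fold sizes
    for f in range(k):
        test_set = set(folds[f])
        yield [i for i in range(len(labels)) if i not in test_set], sorted(folds[f])


labels = ["a"] * 8 + ["b"] * 4
for _, test_idx in stratified_k_fold(labels, k=4):
    print([labels[i] for i in test_idx])  # prints ['a', 'a', 'b'] for every fold

print(len(list(leave_one_out(5))))  # 5
```

With an 8:4 class ratio, every test fold keeps the same 2:1 ratio. A plain contiguous split of this sorted list would instead put all the "b" samples into the last fold.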

Level Up Your Model Training with Cross Validation! 🚀

With cross validation, you can make your data do crossfit and extract maximum value from it. By going beyond the traditional train-test split, you obtain more reliable estimates of model performance and unleash the true potential of your models.

Remember to tailor your cross validation approach to the specific characteristics of your data, such as time series dependencies or class imbalances. Whether it's k-fold cross validation, time series cross validation, or specialized techniques, choose the regimen that best suits your needs.

So, embrace the power of cross validation, push your models to their limits, and let your data sweat it out. Prepare to achieve peak performance and conquer the world of machine learning, one fold at a time!
